Vicon Walkthrough



Revision as of 00:41, 11 November 2011

This page is still under construction


Vicon Hardware

Workflow

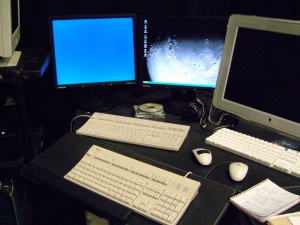

- Turn on two Windows machines in Room 3850

- sr-00153

- sr-00151

- Turn on the Datastation.

- Run one application on each of the two machines:

- Verify that the Vicon DataStation is connected

- Position cameras to cover desired capture volume while maintaining appropriate overlapping regions. You may skip this step if cameras are already positioned correctly (they usually are).

- Set up the VICONiQ2 hierarchy to organize your motion capture data

- Turn on the projector using a remote control.

Drag the VICONiQ2 window to the far left side of the screen to display it on the projector.

If you can't see the Desktop on the projector screen, switch to 1 (as shown below) to connect the Datastation PC with the projector.

- Calibrate the system

- Attach the desired marker set to the subject (refer to "Where to place markers" on p.28 of the VICON System Manual).

- Capture a range of motion (ROM) trial

- Create a calibrated subject

- Capture your data

- Process and edit the data

- Save and export the data to other Vicon or third party applications

- Don't forget to turn off the projector and the Datastation before you leave!

Connecting to the Vicon DataStation

Once the VICONiQ2 program is running on sr-00151, verify that the Vicon DataStation is connected to the software. To connect to the Vicon DataStation, follow these steps:

- Click either the Capture or the Calibrate tab.

- On the lower left-hand side of the window, find the two round indicators located next to each other. One indicator is labelled either DataStation Connected or DataStation Not Connected; the other is labelled either Realtime Connected or Realtime Not Connecting.

- If the labels indicate that you are not connected to the DataStation, click the button with two triangles on the lower right-hand side of the window.

Data Organization

- Go to Data Management

- If you are not working with an existing database, create a new database by following these steps:

- Click Eclipse > New Database...

- Select a location and enter the name for your new database.

- Select Generic Template .eni

- Click Create

- To create a new subject, click

- To create a new session in the subject, click

- A new trial will be made automatically when you capture. The system will automatically name trials unless you name them. It will increment a number at the end of the take name (Trial001, Trial002, and so on).

- Icons that appear in the hierarchy
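Automatic trial naming can be pictured with the short Python sketch below. It assumes, without confirmation from the VICONiQ documentation, that the next trial number is one more than the highest existing `TrialNNN` number and that custom-named trials are ignored; `next_trial_name` is a hypothetical helper, not part of VICONiQ.

```python
import re


def next_trial_name(existing_names, prefix="Trial", width=3):
    """Hypothetical helper: suggest the next auto-incremented trial name."""
    pattern = re.compile(rf"^{re.escape(prefix)}(\d+)$")
    # Collect the numbers of trials that follow the automatic naming scheme.
    numbers = []
    for name in existing_names:
        match = pattern.match(name)
        if match:
            numbers.append(int(match.group(1)))
    # Increment the highest number, zero-padded to `width` digits.
    return f"{prefix}{max(numbers, default=0) + 1:0{width}d}"


print(next_trial_name(["Trial001", "Trial002", "walk_fast"]))  # Trial003
```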

Calibration

Summary

- Calibration determines each camera's location and orientation within the 3D Workspace so that the 3D location of each marker can be computed.

- Calibration should be performed frequently.

- Two types of calibration must be done: dynamic calibration and static calibration, both described below.

If you reposition even one camera, follow these steps before calibration:

- Select Setup

- Select Mask tab on the right

- Click Start Recording Background

- Click Stop Recording Background
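Conceptually, recording the background tells the system which bright spots a camera sees when no markers are present, so that they can be ignored later. The Python sketch below illustrates that idea for one camera's 2D blob centres; the pixel rounding, the 2-pixel `radius`, and both function names are assumptions for illustration, not how VICONiQ actually stores or applies its masks.

```python
import math


def record_background(frames):
    """Collect every blob centre (rounded to whole pixels) seen with no markers in the volume."""
    mask = set()
    for blobs in frames:
        for x, y in blobs:
            mask.add((round(x), round(y)))
    return mask


def apply_mask(blobs, mask, radius=2.0):
    """Keep only blobs farther than `radius` pixels from every masked position."""
    kept = []
    for x, y in blobs:
        if all(math.hypot(x - mx, y - my) > radius for mx, my in mask):
            kept.append((x, y))
    return kept


# Hypothetical data: a reflection near (100, 50) is recorded while the volume is empty.
mask = record_background([[(100.2, 50.1)], [(100.4, 49.8)]])
print(apply_mask([(101.0, 50.0), (300.0, 200.0)], mask))  # [(300.0, 200.0)]
```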

Dynamic calibration

Summary

- Calculates the relative positions and orientations of the cameras

- Linearises the cameras

- Tool required: 240mm calibration wand

Steps

- Click Calibrate

- Change the viewing pane to Camera (right below Setup)

- Click the connect/disconnect button (two red arrowheads pointing at each other) to connect to the Datastation. The DataStation Connected and RealTime Connected icons should turn from blue to green. If either turns yellow, check that the Datastation is turned on.

- Make sure 240_mm_Wand is selected

- Click the first camera (1) and Shift-click the last (12). This should show small camera views for all of the cameras.

- Click Start Wand Wave

- Move the calibration wand throughout the whole volume (important!)

- Start on the outside of the volume, facing inwards

- Wave the wand in a vertical figure of eight in front of you while moving around the outside of the volume

- After a circuit, move inwards and continue spiralling

- Finish by crouching in the center, waving the wand in circles near the floor and spiralling up to head height

Calibration screen-shot (you should be able to see it on the projector during the calibration)

Camera positions

- Click Stop Wand Wave

- The calibration results are shown in the Status Report (Awesome! is the best result, followed by Excellent!, Good, and then Bad). All of the cameras should be rated Excellent! or better.
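The acceptance rule above can be expressed as a small check. This Python sketch assumes you have copied each camera's rating from the Status Report into a dictionary by hand; `cameras_below` is a hypothetical helper, and the rating strings are the ones listed above.

```python
# Ratings from the Status Report, ordered from worst to best.
RATING_ORDER = ["Bad", "Good", "Excellent!", "Awesome!"]


def cameras_below(results, minimum="Excellent!"):
    """Return the camera numbers whose rating is worse than `minimum`."""
    threshold = RATING_ORDER.index(minimum)
    return sorted(
        camera for camera, rating in results.items()
        if RATING_ORDER.index(rating) < threshold
    )


report = {1: "Awesome!", 2: "Excellent!", 3: "Good"}
print(cameras_below(report))  # [3]
```

A non-empty result means at least one camera falls short of the Excellent! threshold.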

Static calibration

Summary

- Calculates the origin or center of the capture volume and determines the orientation of the 3D Workspace

- Tool required: L-Frame calibration object

Steps

- Place the L-Frame calibration object at the center of the capture volume or wherever you'd like the origin of your floor to be.

The side of the L-Frame with two markers at the end is the X-axis, and the Z-axis is perpendicular to the floor.

- Click Track L-Frame

- After a few seconds, click Set origin

- Now you can save out your calibration
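The following Python sketch shows how a floor coordinate system can be derived from three L-Frame marker positions: the corner marker becomes the origin, the X-axis points along the arm described above, the Z-axis is perpendicular to the floor, and the Y-axis completes a right-handed frame. Which physical markers are used, and the assumption that the second arm points toward +Y, are illustrative choices; VICONiQ's own L-Frame solver is not described on this page.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(v):
    length = math.sqrt(_dot(v, v))
    return tuple(x / length for x in v)


def lframe_axes(corner, x_end, y_end):
    """Build orthonormal floor axes from three (hypothetical) L-Frame marker positions."""
    x_axis = _unit(_sub(x_end, corner))
    # The floor normal is perpendicular to both arms of the L-Frame.
    z_axis = _unit(_cross(x_axis, _sub(y_end, corner)))
    # Recompute Y so the frame is exactly orthogonal even if the arms are not.
    y_axis = _cross(z_axis, x_axis)
    return corner, (x_axis, y_axis, z_axis)


def to_floor_coords(point, origin, axes):
    """Express a 3D point in the floor frame defined by the L-Frame."""
    offset = _sub(point, origin)
    return tuple(_dot(offset, axis) for axis in axes)


origin, axes = lframe_axes((1, 2, 0), (2, 2, 0), (1, 3, 0))
print(to_floor_coords((1, 2, 5), origin, axes))  # (0.0, 0.0, 5.0)
```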

Capturing a range of motion (ROM) trial

Summary

- A range of motion (ROM) trial is used to scale a basic/generic skeleton template (VST), which has generic marker positions, to the physical dimensions of the captured subject, producing a subject-specific skeleton (VSK).

- Basically, a VSK file is a VST file customized to one subject. One VST can be used to create any number of VSKs, each matching a different subject's body proportions. The VSK file makes labeling much easier.

- VST files can be created using VICONiQ's Modeling mode (see Create a VST file below).
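To make the VST-to-VSK idea concrete, the Python sketch below estimates one scale factor per segment by comparing the median distance between two markers across the ROM frames with the template's generic length. It only illustrates the concept of fitting a template to a subject; Vicon's actual subject-calibration algorithm is not described on this page, and the marker names, template length, and function names are hypothetical.

```python
import math
from statistics import median


def segment_length(frames, marker_a, marker_b):
    """Median distance between two markers over frames where both are visible."""
    distances = [
        math.dist(frame[marker_a], frame[marker_b])
        for frame in frames
        if marker_a in frame and marker_b in frame
    ]
    return median(distances)  # raises StatisticsError if the pair is never visible


def scale_factors(template, frames):
    """template maps segment -> (marker_a, marker_b, generic_length_mm)."""
    return {
        segment: segment_length(frames, a, b) / generic_length
        for segment, (a, b, generic_length) in template.items()
    }


# Hypothetical template entry and ROM frames (positions in mm).
template = {"RUpperArm": ("RSHO", "RELB", 300.0)}
frames = [
    {"RSHO": (0.0, 0.0, 0.0), "RELB": (0.0, 0.0, 330.0)},
    {"RSHO": (10.0, 0.0, 0.0), "RELB": (10.0, 0.0, 330.0)},
    {"RSHO": (0.0, 0.0, 0.0)},  # RELB occluded in this frame
]
print(scale_factors(template, frames))
```

Using the median rather than a single frame keeps an occasional noisy or mislabeled frame from skewing the estimate.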

Steps

- Go back to Data Management and make sure you are in the session you want to use.

- Select Capture and then Live 3D workspace

- Type in a file name

- Select Type 2D Camera Data but not Realtime Output

- Click Start to start capturing a subject's movement

- Have the subject start with a T-pose, rotate all joints (wrist, lower and upper arms, shoulders, back, neck, head, etc.) one by one, and end with a T-pose.

- Click Stop to finish capturing

- Click Load Into Post; doing so will automatically select Post Processing mode

Create a calibrated subject

Data Reconstruction

Summary

- Reconstruction turns the 2D camera data from all cameras into one 3D data file

- Poor calibration produces poor 3D data
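Reconstruction combines rays from several calibrated cameras into one 3D point. The Python sketch below shows the simplest version of that idea for two cameras, the midpoint method: find the closest points on the two rays and average them. The gap between the rays serves as a rough quality measure, which is one way to see why poor calibration yields poor 3D data. This is a textbook technique chosen for illustration, not VICONiQ's reconstruction algorithm, and the camera centres and ray directions are assumed to be known from calibration.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    Returns (point, gap), where gap is the distance between the two rays.
    """
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(p + s * v for p, v in zip(c1, d1))  # closest point on ray 1
    p2 = tuple(q + t * v for q, v in zip(c2, d2))  # closest point on ray 2
    midpoint = tuple((x + y) / 2 for x, y in zip(p1, p2))
    return midpoint, math.dist(p1, p2)


point, gap = triangulate_midpoint((0, 0, 0), (1, 1, 0), (2, 0, 2), (-1, 1, 0))
print(point, gap)  # (1.0, 1.0, 1.0) 2.0
```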

Steps

- Select Pipeline tab. If you don't see the tab, select Post Processing

- Double-click the CircleFit, Reconstruct, Trajectory option. If the option is not available, click the add button and double-click the CircleFit, Reconstruct, Trajectory option.

- Set the two parameters as follows:

- Min. Cameras to Start Trajectory: 2

- Min. Cameras to Reconstruct: 2

- Then, select Show Advanced Parameters from the pop-up window. This should show additional parameters. Try the following:

- Delete single frame trajectories: Yes

- Min circle diameter: 1

- Max circle diameter: 30 to 100

- Circle fitting error: 1.3 to 1.5

- Minimum circle fitting quality: 0.45 to 0.5

- Right-click on CircleFit, Reconstruct, Trajectory and select Run Selected Op

- Now you can see the markers in motion through the timeline.

Labelling

Summary

- Labeling assigns specific names to specific markers; you need to label markers because VICONiQ doesn't know which marker belongs to which part of the human body

- If the movement is simple, you usually need to label markers in only a single frame

- There are 4 different modes for labelling, but you need to know only two:

- Single allows you to assign just one marker at a time

- Sequence allows you to sequentially select markers and identify which ones they are

- There are also 4 different rules for how the label is assigned to the marker through time:

- Forward assigns the label from the current frame onward

- Backward is the opposite of Forward

- Whole assigns the label to the marker's entire trajectory, for as long as it exists. Useful if markers don't swap or get confused with one another

- Range labels a range of markers
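The Forward, Backward, and Whole rules can be summarised as a choice of which frames of a marker's trajectory receive the label. The Python sketch below assumes a trajectory is one unbroken run of frames from `first` to `last` and that labeling starts at the current frame; `frames_to_label` is a hypothetical helper. Range is left out because the page does not say how its range is chosen.

```python
def frames_to_label(trajectory, current_frame, rule):
    """Return the frames that receive a label under the given rule."""
    first, last = trajectory
    if not first <= current_frame <= last:
        raise ValueError("the marker does not exist at the current frame")
    if rule == "Forward":    # from the current frame to the end of the trajectory
        return list(range(current_frame, last + 1))
    if rule == "Backward":   # from the start of the trajectory up to the current frame
        return list(range(first, current_frame + 1))
    if rule == "Whole":      # every frame in which the trajectory exists
        return list(range(first, last + 1))
    raise ValueError(f"unsupported rule: {rule}")


print(frames_to_label((10, 14), 12, "Forward"))  # [12, 13, 14]
```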

Steps

- Select Post Processing and select Subjects

- Click Create Vicon Skeleton Template (VST)

- Select a VST file (for a full-body capture, choose iQ_HumanRTKM_V1.vst under C:\Program Files\Vicon\Models\VICONiQ2.0) and type in a name

- Select Labelling and click Sequence and Whole

- In 3D Workspace, manually label markers as follows (for the labels, please refer to "Where to place markers" on p.28 of VICON System Manual)

- Select a label (LFHD, RFHD, etc.) from the list

- Click a corresponding marker (white dot) in 3D Workspace

- Go to Pipeline

- Make sure to set the current frame to the frame that was labeled in the previous step.

- Right-click Autolabel Range of Motion and select Run Selected Op (if the operation is not available in the list, add it by clicking the add button). This will auto-label the rest of the frames in the ROM trial.

- Also run Delete Unlabelled Trajectories

- You can check trajectory continuity by looking at the Continuity Chart

Subject calibration

Steps

- Make sure you are in a T-pose frame. Select the Subjects tab.

- Make sure that the Active Subjects box is in Edit mode.

- Click the G button and then the T button. Now you should see a blue T on the timeline.

- Click the small button next to the Calibrate Subject button.

- In the option window, set Calibration Quality to Medium. Also, if you set Autosave to Yes, then VSK will be saved automatically; otherwise, export VSK manually later.

- Finally, click Calibrate Subject. You should see your "sticks and boxes" subject model (if you don't see this, go to the View Options tab on the left and make sure the subjects box is checked).

Capture

Summary

- Capturing works the same way as for the ROM trial.

- Make sure that the range of motion (ROM) trial is of good quality. If not, recalibrate the system and recapture a ROM trial.

Steps

- Go back to Data Management and make sure you are in the session you want to use.

- Select Capture and then Live 3D workspace

- Type in a file name

- Select Type 2D Camera Data but not Realtime Output

- Click Start to start capturing a subject's movement

- Click Stop to finish capturing

- Click Load Into Post; doing so will automatically select Post Processing mode

Process and edit the data

Summary

- At this point, you should have the VSK file created for the subject, so you do not need to manually label all the markers for each trial.

Steps

- Select Pipeline tab. If you don't see the tab, select Post Processing

- Double-click the Load Subject(s) option. Check that the VSK file you created in the subject calibration step is selected as Input File Name(s). If not, click the browse button and find the VSK file. Set the Use File Name option to Yes.

- Right-click on Load Subject(s) and select Run Selected Op.

- Double-click the CircleFit, Reconstruct, Trajectory option. If the option is not available, click the add button and double-click the CircleFit, Reconstruct, Trajectory option.

- Set the two parameters as follows:

- Min. Cameras to Start Trajectory: 2

- Min. Cameras to Reconstruct: 2

- Then, select Show Advanced Parameters from the pop-up window. This should show additional parameters. Try the following:

- Delete single frame trajectories: Yes

- Min circle diameter: 1

- Max circle diameter: 30 to 100

- Circle fitting error: 1.3 to 1.5

- Minimum circle fitting quality: 0.45 to 0.5

- Right-click on CircleFit, Reconstruct, Trajectory and select Run Selected Op

- Also right-click on Trajectory Labeller and select Run Selected Op. This automatically labels all markers by using the VSK file.

- Check if markers are labeled correctly. If not, correct labels using Single and Forward under Labelling. Continuity Chart is again helpful.

- Go to Pipeline and run the following operations in this order by right-clicking each one and selecting Run Selected Op:

- Trim Tails will remove unreliable trajectory data

- Filter Using a Butterworth Filter will remove frequencies that are inconsistent with the markers' actual motion, such as high-frequency noise

- Fill Gaps using Splines

- Kinematic Fit will fit the kinematic skeleton to the markers using the VSK file

- Fill Gaps using Kinematic Model will fill in missing data from occluded markers

- Delete Unlabelled Trajectories (note: run this operation only if you don't have any mislabeled trajectories, that is, Trajectory Labeller operation has been successful)
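For reference, the Python sketch below shows what a Butterworth smoothing step does to one marker coordinate: a second-order low-pass filter designed with the bilinear transform, run forward and then backward so the result has no phase lag (a common choice for motion capture data). The filter order, the 6 Hz cutoff, and the 120 Hz sample rate are hypothetical example values; the page does not give VICONiQ's settings or implementation.

```python
import math


def butter2_lowpass(cutoff_hz, sample_rate_hz):
    """Second-order Butterworth low-pass coefficients via the bilinear transform."""
    k = math.tan(math.pi * cutoff_hz / sample_rate_hz)  # pre-warped cutoff
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b = (b0, 2.0 * b0, b0)
    a = (2.0 * (k * k - 1.0) * norm, (1.0 - math.sqrt(2.0) * k + k * k) * norm)
    return b, a


def iir_filter(b, a, samples):
    """Apply y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2], starting at rest."""
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


def filtfilt(b, a, samples):
    """Zero-phase filtering: filter forward, then filter the reversed result."""
    forward = iir_filter(b, a, samples)
    return iir_filter(b, a, forward[::-1])[::-1]


b, a = butter2_lowpass(cutoff_hz=6.0, sample_rate_hz=120.0)
# Hypothetical marker coordinate (mm): a slow 1 Hz sway plus frame-to-frame jitter.
raw = [20.0 * math.sin(2 * math.pi * n / 120) + (1.5 if n % 2 else -1.5) for n in range(480)]
smooth = filtfilt(b, a, raw)  # the first and last few samples show start-up transients
```

Because the filter starts from rest, samples near both ends are distorted; production implementations usually pad the signal or initialise the filter state to reduce this.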

Export the data

Steps

- Select Pipeline tab under Post Processing

- Run one of the following operations by right-clicking the operation and selecting Run Selected Op:

- Export data to C3D file for use with MotionBuilder

- Export motion to V-file for use with Maya or MotionBuilder

- Export data to CSM file; doing so will write the xyz marker coordinate values to a single file.

- Export data to CSV file; doing so will produce all joint parameters and gait parameters. You can directly load them into Excel.

- Note: if the operation you need to perform is not available, click the add button to add the operation

Create a VST file

UNDER CONSTRUCTION

Summary

- VST files can be created using VICONiQ's Modeling mode

- You are creating bones and joints by creating segments.

Steps

- Capture a trial.

- After selecting Load Into Post, go to Pipeline and run CircleFit, Reconstruct, Trajectory and Export data to C3D file

- Go to Modeling

- Go to File and select Clear

- Import C3D file which you exported

- Choose No for T-Pose, and enter a single frame number.

- On the Outlier tab, click Root

- Click Manipulate and move a small box with three arrows. This is your first joint, so place it at an appropriate position. You can click-drag the screen to change the view.

- Make a segment that connects two joints by doing the following:

- Click Select and click the joint (Joint A)

- Click Segment and click anywhere to create a new joint (Joint B)

- Click off Segment. Now you see a new segment that connects Joint A to Joint B.

- Click Manipulate and move Joint B to an appropriate place. You may also want to change the joint type (Free, Hinge, Rigid, etc.)

- Repeat the previous step to create all the remaining segments

- You also need to create markers:

- Click Select and then Marker

- Click on the screen to create a marker. You'll see all newly created markers under ModelMarker of Outlier. You should always rename markers so it is easy to identify them later.

- Click off Marker

- Click Manipulate and marry the marker you created with the real marker captured by the cameras.

- Repeat the previous step to create all the remaining markers.

- Go to File, select Save as and save your VST under the correct directory.
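The joint-and-segment structure built in Modeling mode can be pictured as a tree. The Python sketch below models that idea with hypothetical `Joint` objects: each new segment connects an existing joint to a new child joint, joints carry a type such as Free, Hinge, or Rigid, and markers are attached to joints by name. It illustrates the data structure only and does not read or write the VST file format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Joint:
    name: str
    joint_type: str = "Free"  # e.g. "Free", "Hinge", "Rigid"
    # Excluded from repr/eq so the parent <-> child link does not recurse.
    parent: Optional["Joint"] = field(default=None, repr=False, compare=False)
    children: List["Joint"] = field(default_factory=list)
    markers: List[str] = field(default_factory=list)

    def add_segment(self, child_name, joint_type="Free"):
        """Create a segment from this joint to a new child joint."""
        child = Joint(child_name, joint_type, parent=self)
        self.children.append(child)
        return child


def segments(root):
    """List every (parent, child) segment in the skeleton, depth first."""
    result = []
    stack = [root]
    while stack:
        joint = stack.pop()
        for child in joint.children:
            result.append((joint.name, child.name))
            stack.append(child)
    return result


root = Joint("Root")
hip = root.add_segment("LHip")
knee = hip.add_segment("LKnee", joint_type="Hinge")
knee.markers.append("LKNE")  # hypothetical marker name
print(segments(root))  # [('Root', 'LHip'), ('LHip', 'LKnee')]
```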

TIPS

- Use Alt key and mouse to rotate the view

Glossary

- Calibration: Method used to measure the relative locations of all the cameras in the motion capture system

- Capture Volume: The actual amount of space in which you are able to capture data.

- Datastation: Hardware which captures all camera and analogue data. This provides the link between the cameras and analogue devices and the PC running the Workstation software.

- Subject: Person or object whose motion is to be captured

- Trial: An act of data capture. Also refers to the resulting C3D file.

- Wand: Device used for dynamic calibration.

- Workspace: 3D space in which motion will be captured

- Workstation: Software that controls the Datastation. This is installed on a PC linked to the Datastation.

Related Documentation

Exact same version (VICONiQ2.0)

- Motion Capture Pipeline, Index - Oregon State University

- Motion Capture/Animation - Irina Skvortsova, Software Technology Lab Queen's University

- Subject Calibration and Automatic Labelling - Christoph Bregler, New York University

Newer version

- Motion Capture Path 1: Setup & Calibration - University of Texas at Dallas

- Recapturing Life - Subject Calibration and Automatic Labelling - Chris Bregler, Computer Sciences/Courant Institute cs.nyu.edu/csweb and Jean-Marc Gauthier, ITP/Tisch itp.nyu.edu

Older version

- Offline capturing of full-body motion with the Vicon system - University of Toronto Dynamic Graphics Project

- Manual Vicon Workstation - University of Twente Computer Science

- A real-time motion capture application for virtual-try-on - University of Geneva MIRALab

Others

- Real-time Motion Capture - University of Utah Computer Science

- Importing large .CSV files into Excel - Vicon

- Marker placement guide - University of Twente Computer Science

- Getting Data from Gait Traces - Tim Wrigley, University of Melbourne

- Recapturing Life - Chris Bregler, Computer Sciences/Courant Institute cs.nyu.edu/csweb and Jean-Marc Gauthier, ITP/Tisch itp.nyu.edu

- Cleaning Data, Working with Motion Builder and Maya - Ying Wei, Ohio State University, Computer Science and Engineering

- ACCAD Motion Capture Lab - ACCAD, Ohio State University

- Mocap Vicon Workflow - INITION
