Kinect

Kinect libraries and APIs

Several libraries exist for the Kinect. Some of them have been installed on the Blackbox computers.

- Microsoft SDK: Microsoft's official SDK for the Kinect is installed on the only Windows 7 machine in the Blackbox and is clearly labeled as such.

- OpenNI: The OpenNI library

Other libraries (which have not yet been installed on the Blackbox machines) include the following:

- libfreenect

- iPi

One word of caution: some of these libraries cannot be installed on the same machine. For instance, the OpenNI library should not be installed alongside the Microsoft Kinect SDK.

Using Kinect motion data

You can export Kinect motion capture data into BVH format, a standard motion data format that can be imported into, say, Credo Interactive's DanceForms 2.0 choreography and animation software. The software is available at http://tech.integrate.biz/kinect_mocap.htm

Errors in using the Kinect for motion tracking

Three kinds of errors can arise from using the Kinect for motion tracking: temporal aliasing, spatial resolution errors, and occlusion errors.

Temporal aliasing

The Nyquist theorem states that, given a sampling rate f, any frequency component above what is known as the Nyquist frequency (which is f/2) will not be reconstructed properly. What does this mean in terms of motion capture? It means that movement that is very fast and nonlinear may suffer from aliasing. Two kinds of nonlinear motion are affected by temporal aliasing:

- Periodic motion. Examples of periodic motion include: an arm being swung around in a wide circle; jumping up and down; a finger tracing a sinusoidal pattern in the air; a repeated dabbing motion.

- Non-periodic, non-monotonic motion. Examples include: a single martial-arts-style punch in the air, starting from the time the hand extends from the chest and finishing with the arm near the chest again; tracing random, curvy patterns in the air with your nose.

We consider each of these two cases in turn.

Temporal aliasing of periodic motion

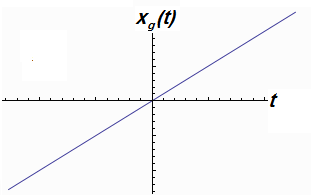

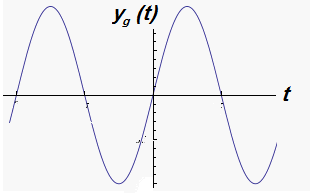

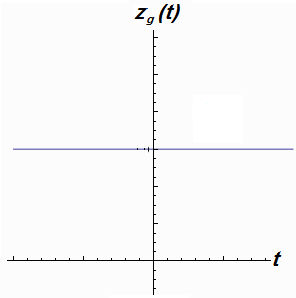

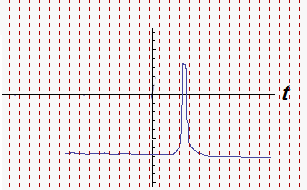

Consider the movement of a single point on the human body through 3D space, measured with reference to a 3D coordinate system that is fixed to the room. Let xg(t), yg(t), and zg(t) represent the true (ground truth) values of the position of that point, as measured within that coordinate system, at time t. We can plot the position of the point along each of the three axes. For the purposes of illustration, imagine the tip of a finger as it steadily traces a sine wave in a plane perpendicular to the z axis, moving from "left to right", that is, from one end of the x axis to the other. If we assume that the coordinate position (0, 0, 0) is located near the finger of the mover, the motion can be plotted along the three reference axes, and might look something like this:

The question is: at what point would this motion be erroneously reconstructed from Kinect sampling because of temporal aliasing? In this example the x component changes steadily and the z component stays constant, so neither is at risk of aliasing, and we focus on the reconstruction of the y component. (It should be noted at this point that the random error of the Kinect's depth measurements grows quadratically with the distance from the sensor. See the section below on spatial resolution errors.) Given that the Kinect sampling rate is 30 Hz, the Nyquist theorem predicts that the frequency of the finger's oscillation must not exceed 15 Hz (the Nyquist frequency). That's an awfully fast finger, and so we can safely use the Kinect to sample this motion. In fact, any other kind of periodic motion of the body (swinging an arm in a large circle, jumping up and down) will happen at a frequency far below the Nyquist frequency.
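As a rough illustration of the 15 Hz limit discussed above, the following Python sketch computes the frequency at which a pure sinusoid would appear after sampling. It assumes an ideal, evenly spaced 30 Hz sampler; the function names `aliased_frequency` and `is_aliased` are hypothetical and not part of any Kinect library.

```python
KINECT_FRAME_RATE_HZ = 30.0  # sampling rate quoted in the text


def aliased_frequency(f_hz, fs_hz=KINECT_FRAME_RATE_HZ):
    """Apparent frequency of a sinusoid of f_hz after sampling at fs_hz.

    Frequencies fold back around multiples of the sampling rate, so the
    result always lies between 0 and the Nyquist frequency fs_hz / 2.
    """
    folded = f_hz % fs_hz
    return min(folded, fs_hz - folded)


def is_aliased(f_hz, fs_hz=KINECT_FRAME_RATE_HZ):
    """True if the sinusoid lies above the Nyquist frequency."""
    return f_hz > fs_hz / 2.0


if __name__ == "__main__":
    for f in (2.0, 10.0, 20.0, 29.0):
        print(f, "Hz appears as", aliased_frequency(f), "Hz;",
              "aliased" if is_aliased(f) else "preserved")
```

Under these assumptions, a 2 Hz finger oscillation is preserved, whereas a hypothetical 20 Hz oscillation would be reconstructed as a 10 Hz one.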

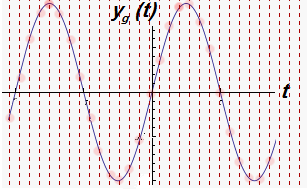

The image below shows how a periodic motion at a frequency below the 15 Hz Nyquist frequency can be reconstructed from Kinect sampling data.

Temporal aliasing of aperiodic, nonmonotonic motion

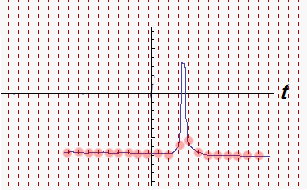

However, the human body can perform very fast, nonmonotonic, aperiodic movements, which are susceptible to temporal aliasing. The most obvious example is a punch by a highly skilled martial artist. (The motion capture technician at Emily Carr, Rick Overington, has reported this to be true at their own facilities.) It may be difficult to capture the movements of this guy, for example: http://www.youtube.com/watch?v=qdSY-_qs_mg

This phenomenon can also be understood in the context of the Nyquist theorem. Any movement gesture or phrase can be seen as a finite signal in 3 dimensions that is decomposable into a Fourier series. In the case of a martial arts punch, one of the components of the series is a high-amplitude signal with a frequency greater than the Nyquist frequency. (An example of a movement that contains a low-amplitude signal with a high frequency might be very strong shivering.) This component will be aliased when the movement is reconstructed from the sampled signal. And since this component is high-amplitude and thus critical to our perception of the movement, an aliased reconstruction will be perceptually very different from the original gesture. The movement will be "smoothed out", appearing less jerky than it really is.
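To make the "smoothed out" effect concrete, here is a minimal Python sketch under stated assumptions. The punch is modeled as a Gaussian out-and-back pulse, which is purely an illustrative choice; the width (10 ms), reach (0.6 m), and timing are hypothetical values picked so that much of the pulse's spectrum lies above 15 Hz and its peak falls between two 30 Hz frames. None of these numbers come from measured data.

```python
import math

FRAME_RATE_HZ = 30.0          # Kinect sampling rate quoted in the text
REACH_M = 0.6                 # hypothetical full extension of the fist
WIDTH_S = 0.01                # hypothetical pulse width (standard deviation)
PEAK_TIME_S = 0.5 + 1.0 / 60  # peak placed midway between two frames


def punch_displacement(t):
    """Hypothetical fist displacement (m) at time t: a Gaussian pulse."""
    return REACH_M * math.exp(-0.5 * ((t - PEAK_TIME_S) / WIDTH_S) ** 2)


def sampled_peak(frame_rate_hz=FRAME_RATE_HZ, duration_s=1.0):
    """Largest displacement seen when sampling at frame_rate_hz."""
    n_frames = int(duration_s * frame_rate_hz) + 1
    return max(punch_displacement(i / frame_rate_hz) for i in range(n_frames))


if __name__ == "__main__":
    ratio = sampled_peak() / REACH_M
    print("fraction of true reach captured at 30 Hz:", round(ratio, 3))
    print("fraction captured at 300 Hz:", round(sampled_peak(300.0) / REACH_M, 3))
```

With these assumed values, the 30 Hz samples capture only a fraction of the true extension, while a faster hypothetical sampler recovers nearly all of it. A slower or differently timed pulse would be captured much more faithfully.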

In fact, pay close attention to whether the Kinect is suitable for sampling any movement that contains very rapid changes in velocity (which is the first derivative of position as a function of time) or acceleration (which is the second derivative). For instance, the expressivity of the urban dance form of popping hinges precisely on very rapid and sophisticated changes in acceleration. (This is a playlist of popping videos from YouTube.)
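One way to check how rapidly velocity and acceleration change in a recording is to estimate both from the sampled positions. The sketch below uses central finite differences on a single coordinate of a single joint, assuming evenly spaced frames at 30 Hz; the function name `finite_differences` is hypothetical and not part of any Kinect SDK.

```python
def finite_differences(positions, frame_rate_hz=30.0):
    """Estimate velocity and acceleration from evenly spaced samples.

    positions: one coordinate (in meters) of one joint, one value per frame.
    Returns (velocity, acceleration) lists for the interior frames only,
    so each list has len(positions) - 2 entries.
    """
    dt = 1.0 / frame_rate_hz
    interior = range(1, len(positions) - 1)
    velocity = [(positions[i + 1] - positions[i - 1]) / (2 * dt)
                for i in interior]
    acceleration = [(positions[i + 1] - 2 * positions[i] + positions[i - 1]) / dt ** 2
                    for i in interior]
    return velocity, acceleration


if __name__ == "__main__":
    # Synthetic test signal x(t) = t^2, whose true acceleration is 2 m/s^2.
    samples = [(i / 30.0) ** 2 for i in range(6)]
    vel, acc = finite_differences(samples)
    print(vel)
    print(acc)
```

Note that differencing amplifies measurement noise, so estimates computed from real Kinect data will be considerably rougher than this synthetic example suggests.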

Spatial resolution errors

Khoshelham (2011) reports that random error of Kinect depth measurements increases quadratically with increasing distance from the sensor. The maximum random error is 4 cm. Khoshelham concludes that at a distance beyond the optimal distance of 1-3 meters, the quality of the data is degraded by noise and low spatial resolution. Keep this in mind when you plan your motion capture activities with the Kinect. For more experimental results on Kinect's spatial resolution, see also Smisek, Jancosek, & Pajdla (2011).
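For planning purposes, the quadratic growth described above can be turned into a rough estimate of random error at a given distance. The sketch below assumes a pure quadratic model, error = k * distance^2, calibrated so that the 4 cm maximum is reached at a maximum range of 5 m. That 5 m figure is an assumption and is not stated in the text above, so check it against Khoshelham (2011) before relying on the numbers. The function name `random_depth_error_m` is hypothetical.

```python
def random_depth_error_m(distance_m, max_error_m=0.04, max_range_m=5.0):
    """Rough random depth error (m) at distance_m under a quadratic model.

    max_error_m: maximum random error quoted in the text (4 cm).
    max_range_m: ASSUMED distance at which that maximum occurs.
    """
    k = max_error_m / max_range_m ** 2  # coefficient of the quadratic model
    return k * distance_m ** 2


if __name__ == "__main__":
    for d in (1.0, 2.0, 3.0, 5.0):
        print(d, "m ->", round(random_depth_error_m(d) * 100, 2), "cm")
```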

Occlusion errors

If you are using the Microsoft SDK to perform skeleton tracking, know that the SDK can sometimes infer joint positions when the joint is occluded (Fernandez, 2011). You can query the SDK on the quality of the skeleton data. Refer to this video to find out more about how to do this. The SDK allows you to apply filtering to smooth out "skeleton jitter" (Fernandez, 2011), but you will lose movement information through this smoothing. Whether the loss is significant depends on how you need to use the motion data.
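The trade-off between jitter removal and lost movement information can be seen with any low-pass filter. The sketch below uses simple exponential smoothing as a generic stand-in; it is not the Microsoft SDK's filter, and the function name `exponential_smooth` and the parameter `alpha` are hypothetical.

```python
def exponential_smooth(samples, alpha=0.5):
    """Exponentially smooth one coordinate of a joint trajectory.

    alpha close to 1 follows the raw data closely (little smoothing);
    alpha close to 0 smooths heavily and lags behind fast movement.
    """
    if not samples:
        return []
    smoothed = [samples[0]]
    for x in samples[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


if __name__ == "__main__":
    # A one-frame spike stands in for a very fast, genuine movement.
    raw = [0.0, 0.0, 1.0, 0.0, 0.0]
    print(exponential_smooth(raw))
```

In this toy example the one-frame spike is cut to half its height and smeared over the following frames, which is the kind of movement information the smoothing gives up in exchange for less jitter.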

References

Khoshelham, K. (2011). Accuracy analysis of kinect depth data. ISPRS Workshop Laser Scanning (Vol. 38, p. 1). Retrieved December 16, 2011, from http://www.isprs.org/proceedings/XXXVIII/5-W12/Papers/ls2011_submission_40.pdf

Fernandez, D. (2011, June 16). Skeletal Tracking Fundamentals. Kinect for Windows SDK Quickstarts. Retrieved December 16, 2011, from http://channel9.msdn.com/Series/KinectSDKQuickstarts/Skeletal-Tracking-Fundamentals#time=1m24s

Smisek, J., Jancosek, M., & Pajdla, T. (2011). 3D with Kinect. Presented at the 1st IEEE Workshop on Consumer Depth Cameras for Computer Vision, Barcelona, Spain. Retrieved from ftp://cmp.felk.cvut.cz/pub/cvl/articles/pajdla/Smisek-CDC4CV-2011.pdf