Sensor Selection


Introduction and a cautionary tale: Why you need to choose your motion capture system carefully

In Vancouver, Canada, the exit doors on buses are marked with a sign reading "Touch here". Passengers interpret this instruction differently. Some press the door firmly with the palm of their hand, sometimes leaving their hand on the door until it opens and sometimes removing it the moment it makes contact. Other passengers slap or punch the door, particularly when it doesn't respond to their gesture right away. The more impatient they become, the stronger and more frequent their punches get. (This happens frequently!)

However, the doors do not respond to pressure at all. Rather, an ultrasound sensor positioned at the top of the door senses an obstruction in an ultrasonic beam. The most efficient way for a passenger to open the door is either to slowly move their hand towards the door, or to place their hand on the sign until the door opens. If their hand approaches the door too quickly or if it is withdrawn too soon, the system fails to detect the hand's presence and the door remains shut.
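The "withdrawn too soon" failure can be illustrated with a toy model. The sketch below is not the buses' actual controller logic, which is not documented here. It assumes, hypothetically, that the beam is sampled at a fixed rate and that the door opens only once the beam has been obstructed continuously for a minimum dwell time; the function name and the 0.3 s threshold are invented for illustration. Under that assumption a brief slap never registers, and repeated punches do no better than one, because each withdrawal resets the count.

```python
def door_opens(obstructed, sample_period_s, min_dwell_s=0.3):
    """Return True once the beam has been blocked continuously for min_dwell_s.

    obstructed: sequence of booleans, one per sensor sample (True = beam blocked).
    The dwell-time rule and the 0.3 s default are hypothetical.
    """
    needed = max(1, round(min_dwell_s / sample_period_s))  # samples required
    run = 0
    for blocked in obstructed:
        # Length of the current uninterrupted obstruction; any gap resets it.
        run = run + 1 if blocked else 0
        if run >= needed:
            return True
    return False

# A hypothetical 100 Hz sensor.
slap = [False] * 20 + [True] * 5 + [False] * 20    # 50 ms contact
punches = ([True] * 5 + [False] * 5) * 10          # ten quick punches
rest = [False] * 20 + [True] * 100                 # hand held on the sign for 1 s
print(door_opens(slap, 0.01), door_opens(punches, 0.01), door_opens(rest, 0.01))
# False False True
```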

The design of this system contravenes how humans have traditionally mobilized their bodies to open doors. Usually, the more direct force we apply to a door, the quicker it opens. This is a relationship that our bodies understand and that we apply to a wide variety of physical interactions with the world at large. The use of ultrasound sensors to interpret commuters' intention to exit a bus also illustrates how design decisions around the application of sensing technologies affect (for better or for worse) the way we move through an increasingly technology-mediated world. Other examples abound. We use our thumbs in a light, precise, staccato way to type out text on capacitive touch screens. We slow down when approaching secured doors so that infrared sensors can detect our presence and unlock the doors. When playing a dance game on the Microsoft Kinect, users need to orient their torsos to face the Kinect sensor directly to avoid body part occlusion, limiting the range of possible movements they can make.

Different sensors, different uses

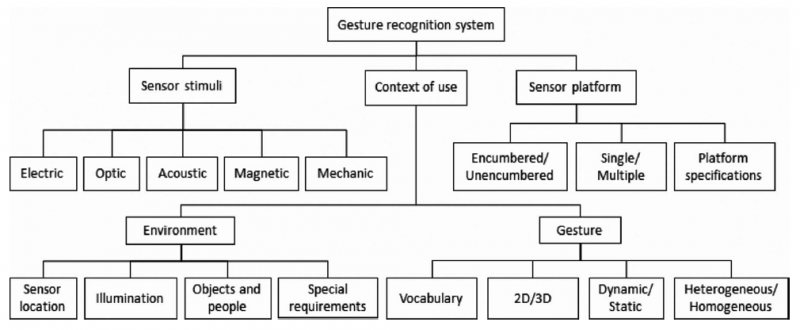

Typologies of sensors used for measuring movement exist in the research literature (Aggarwal & Park, 2004; Garg, Aggarwal, & Sofat, 2009; Gavrila, 1999; Mitra & Acharya, 2007; Pavlovic, Sharma, & Huang, 1997; Rowe, 2008; Wachs, Kölsch, Stern, & Edan, 2011; Wu & Huang, 1999; Yilmaz, Javed, & Shah, 2006). In a detailed review that summarizes many of the findings from earlier reviews, Berman and Stern (2011) propose a typology of sensors (shown as Figure 1) for gesture recognition systems. Their typology is organized around the properties of the sensor technologies and features three parent categories: sensor stimuli, context of use, and sensor platform. They also provide guidelines for selecting which data type to measure based on “movement frequency” (i.e., the rate at which salient aspects of the movement occur):

- Low-frequency movement: position measurements

- Intermediate-frequency movement: velocity measurements

- High-frequency movement: acceleration measurements
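One way to see why these guidelines are plausible is our own gloss, not an argument taken from Berman and Stern. For a sinusoidal movement x(t) = A·sin(2πft), the peak velocity is 2πf·A and the peak acceleration is (2πf)²·A. Each differentiation multiplies the signal by a factor proportional to the movement frequency, so acceleration measurements emphasize fast components and position measurements emphasize slow ones. The sketch below compares two hypothetical movements of equal amplitude.

```python
import math

def peak_derivatives(amplitude_m, frequency_hz):
    """Peak position, velocity and acceleration of x(t) = A*sin(2*pi*f*t)."""
    omega = 2.0 * math.pi * frequency_hz  # angular frequency, rad/s
    return amplitude_m, omega * amplitude_m, omega ** 2 * amplitude_m

# Two hypothetical movements with the same 5 cm amplitude.
for label, f in [("slow sway", 0.5), ("rapid shake", 5.0)]:
    x, v, a = peak_derivatives(0.05, f)
    print(f"{label}: {x:.3f} m, {v:.3f} m/s, {a:.3f} m/s^2")
# A 10x faster movement gives 10x the peak velocity and 100x the peak acceleration.
```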

The authors place a large emphasis on the use of optical methods for motion capture, asserting that “in order to become universally accepted, gesture interface[s] must satisfy the ‘come as you are’ requirement”, i.e., that moving subjects should be “unencumbered” (to use Berman and Stern’s terminology) by markers, sensors, transmitters, and any other devices on their body. However, this only holds true for a subset of human activity. There are many areas of human activity that require, benefit from, or naturally incorporate some kind of encumbrance or extension of the body: conducting music with a baton, wearing a glove while boxing, cutting bamboo using a machete, and flipping a pancake are but a few examples. When we consider human interaction with existing digital technology, we note the importance of the capacitive sensors on touch-based tablets, the pressure sensors in a touch-responsive electronic piano, and the accelerometer in the Apple iPod that, when the device is shaken, randomly selects the next track to play. In fact, movement is the primary way by which we interact with the world, and touch is the fundamental relationship that initiates this interaction (Moore & Yamamoto, 1988; Schiphorst, 2008). Thus, a wide variety of sensors must be brought to bear upon the measurement of salient aspects of human movement.

Figure 1: Typology of sensors for gesture recognition systems, from Berman and Stern (2011).

On this page, we enumerate some existing sensor types and how each can be used for movement analysis. Our review of sensor types places the embodied experience of movement as the central organizing concept. We thus include biometric sensors as part of this review, because we propose that they can be used to infer the qualia of moving when combined with conventional mechanisms for sensing movement.

Position-based sensors

A wide range of position-based sensors exist, but in essence, they all capture the position of the body in space at a given moment. The stimuli used by position-based sensors include electric, optical (including IR and near-IR), acoustic, and magnetic stimuli (Berman & Stern, 2011). These sensors may require the subject to wear or hold specialized equipment, or they may leave the subject unencumbered, in which case the subject needs to be separated from the background through segmentation.
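As a minimal illustration of segmentation for unencumbered subjects, the sketch below uses simple background subtraction. It assumes a static camera, a reference frame of the empty scene, and grayscale frames stored as NumPy arrays, and it marks pixels whose intensity differs from the background by more than a hypothetical threshold. Practical systems use far more robust methods (for example adaptive background models, or depth data as on the Kinect), so this is a toy example only.

```python
import numpy as np

def segment_foreground(frame, background, threshold=25):
    """Boolean mask of pixels that differ from the empty-scene background.

    frame, background: 2-D uint8 grayscale images of the same shape.
    threshold: hypothetical intensity difference (0-255) treated as "changed".
    """
    # Cast to a signed type so the subtraction cannot wrap around at 0/255.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    return diff > threshold

background = np.full((4, 6), 100, dtype=np.uint8)  # empty scene
frame = background.copy()
frame[1:3, 2:4] = 180                               # a "person" enters a 2x2 region
mask = segment_foreground(frame, background)
print(int(mask.sum()))  # 4
```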

Gyroscopes and magnetometers can also be used in concert, usually together with accelerometers that sense the direction of gravity, to measure roll, pitch, and yaw with respect to the Earth's gravitational and magnetic fields as frames of reference.
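The sketch below shows how the two reference fields yield orientation. Roll and pitch come from the direction of gravity, which in practice is usually read from an accelerometer while the device is at rest. Yaw (heading) comes from the magnetometer reading after it is rotated back into the horizontal plane. The code follows a widely used tilt-compensated compass formulation and assumes one particular axis convention: x forward, y right, z down, with the accelerometer reporting about +1 g on z when the device lies flat. Real devices differ in axis orientation and need magnetometer calibration, which is omitted here. Gyroscopes do not appear because they supply changes in orientation rather than an absolute reference.

```python
import math

def roll_pitch_from_gravity(gx, gy, gz):
    """Roll and pitch (radians) from an at-rest accelerometer reading.

    Assumed convention (hypothetical for this sketch): x forward, y right,
    z down, reading about (0, 0, +1) g when the device lies flat.
    """
    roll = math.atan2(gy, gz)
    pitch = math.atan2(-gx, gy * math.sin(roll) + gz * math.cos(roll))
    return roll, pitch

def tilt_compensated_yaw(bx, by, bz, roll, pitch):
    """Heading in radians: 0 = magnetic north, positive toward east."""
    sr, cr = math.sin(roll), math.cos(roll)
    sp, cp = math.sin(pitch), math.cos(pitch)
    # Project the measured field onto the horizontal plane before taking atan2.
    numerator = bz * sr - by * cr
    denominator = bx * cp + by * sp * sr + bz * sp * cr
    return math.atan2(numerator, denominator)

# Device lying flat; field with a hypothetical horizontal strength of 0.2
# and vertical strength of 0.4 (arbitrary units).
roll, pitch = roll_pitch_from_gravity(0.0, 0.0, 1.0)
print(math.degrees(tilt_compensated_yaw(0.2, 0.0, 0.4, roll, pitch)))   # facing north
print(math.degrees(tilt_compensated_yaw(0.0, -0.2, 0.4, roll, pitch)))  # facing east
```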

Acceleration-based sensors

Two types of inertial sensors are widely available commercially.

Linear accelerometers measure acceleration along a single spatial dimension. Three accelerometers can be oriented orthogonally to each other in order to measure acceleration in three dimensions. The Nintendo Wiimote's acceleration sensing system, for example, is built on the ADXL330, a 3-axis linear accelerometer (Analog Devices, 2007; Wisniowski, 2006). Texas Instruments produces the eZ430-Chronos, a watch that uses the VTI CMA3000, another 3-axis accelerometer (VTI Technologies, n.d.; Texas Instruments, 2010).
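Because the three sensing axes are orthogonal, the three single-axis readings can be treated as components of one acceleration vector. The sketch below assumes readings already converted to units of g. At rest the vector's magnitude is about 1 g whatever the device's orientation, so its deviation from 1 g gives a crude, orientation-independent indicator of movement. This is an illustration only, since it cannot fully separate gravity from motion.

```python
import math

def magnitude(ax, ay, az):
    """Magnitude of a reading from three orthogonal single-axis accelerometers."""
    return math.sqrt(ax * ax + ay * ay + az * az)

def dynamic_acceleration(ax, ay, az, g=1.0):
    """Crude motion intensity: deviation of the magnitude from 1 g (units of g)."""
    return abs(magnitude(ax, ay, az) - g)

print(round(magnitude(0.0, 0.6, 0.8), 6))              # 1.0: at rest, but tilted
print(round(dynamic_acceleration(0.9, 0.3, 1.6), 2))   # 0.86: the device is moving
```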

Gyroscopes measure rotational motion; strictly speaking, the MEMS gyroscopes found in phones and game controllers report angular velocity rather than angular acceleration. Given a known starting orientation relative to gravity, integrating this angular velocity tracks changes in pitch and roll. Another measure of rotational movement, yaw, can be derived if a device can also sense its orientation with reference to the Earth's magnetic field. The iPhone 4 has a 3-axis linear accelerometer, a 3-axis gyroscope, and a magnetometer, providing six axes of motion sensing as well as heading information (Dilger, 2010). The original iPhone had no magnetometer and thus could not measure yaw (Sadun, 2007).
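Because a gyroscope reports angular velocity, an angle is obtained by integrating its output over time, and any small bias accumulates into drift. A common remedy, which is not described in the sources cited here, is a complementary filter. It trusts the integrated gyroscope signal over short time scales and the accelerometer's gravity-based tilt estimate over long ones. The single-axis sketch below assumes a 100 Hz sensor, a hypothetical 0.01 rad/s gyroscope bias, and a blending factor of 0.98, which is a typical but arbitrary choice.

```python
def integrate_gyro(rates_rad_s, dt, angle0=0.0):
    """Dead-reckon an angle by summing angular-rate samples (drifts with bias)."""
    angle = angle0
    for w in rates_rad_s:
        angle += w * dt
    return angle

def complementary_filter(rates_rad_s, accel_angles_rad, dt, alpha=0.98, angle0=0.0):
    """Blend integrated gyro rate (short term) with accelerometer tilt (long term)."""
    angle = angle0
    for w, a in zip(rates_rad_s, accel_angles_rad):
        angle = alpha * (angle + w * dt) + (1.0 - alpha) * a
    return angle

# Sensor held still and level for 100 s at 100 Hz; the gyro has a constant bias.
n, dt = 10_000, 0.01
gyro = [0.01] * n          # bias only, no real rotation
accel_tilt = [0.0] * n     # accelerometer correctly reports a level sensor
print(integrate_gyro(gyro, dt))                    # drifts to about 1 rad
print(complementary_filter(gyro, accel_tilt, dt))  # settles near 0.005 rad
```

With these settings the filter's residual error settles at alpha·bias·dt / (1 − alpha), about 0.005 rad, instead of growing without bound.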

Yang and Hsu (2010) have summarized the uses of accelerometers in measuring physical activity. Accelerometers have been used to determine static postures (standing upright, lying down), postural transitions (standing, sitting, postural sway), and gait parameters (heel strike, gait cycle frequency, stride symmetry, regularity, step length, and gait smoothness). In combination with other sensors, accelerometers can also be used to infer falling (when combined with impact detection) and energy expenditure (particularly when combined with barometric sensors to determine changes in elevation). Higher-context knowledge can be generated through accelerometry, such as restfulness during sleep, which can be inferred from the number of postural transitions during the various sleep cycles (Yang & Hsu, 2010); by tracking energy expenditure, we might also be able to infer fatigue.
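To make the static-posture and postural-transition examples concrete, the sketch below assumes a single accelerometer worn on the trunk. Its hypothetical mounting puts +y toward the head and +z out of the chest, with the sensor reading +1 g on whichever axis points away from the ground. Each at-rest sample is classified by the angle between the body's long axis and vertical, then by the chest axis when lying. The code counts label changes, the kind of count from which restfulness might be inferred. Thresholds are illustrative, not values from Yang and Hsu.

```python
import math

def classify_posture(ax, ay, az, upright_max_deg=30.0, lying_min_deg=60.0):
    """Label one at-rest trunk accelerometer sample (units of g).

    Hypothetical mounting: +y toward the head, +z out of the chest; the sensor
    reads +1 g on whichever axis points away from the ground.
    """
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("sample has zero magnitude")
    # Angle between the body's long axis (+y) and vertical, in degrees.
    inclination = math.degrees(math.acos(max(-1.0, min(1.0, ay / norm))))
    if inclination <= upright_max_deg:
        return "upright"
    if inclination < lying_min_deg:
        return "reclined"
    # Lying: the chest axis distinguishes back, front and side.
    if abs(az) >= abs(ax):
        return "supine" if az > 0 else "prone"
    return "side"

def count_transitions(labels):
    """Number of times the posture label changes between consecutive samples."""
    return sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)

night = [(0.0, 0.05, 1.0), (0.0, 0.0, 0.98), (0.97, 0.0, 0.1), (0.0, 0.1, -1.0)]
labels = [classify_posture(*s) for s in night]
print(labels, count_transitions(labels))
# ['supine', 'supine', 'side', 'prone'] 2
```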

Compared to position-based sensors, accelerometers are particularly good at detecting abrupt and frequent changes in velocity. Reconstructing positional information from acceleration is possible but prone to error (Giansanti, Macellari, Maccioni, & Cappozzo, 2003); nevertheless, we hypothesize that accelerometers are particularly well suited to sensing data related to changes in movement quality.
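One reason reconstruction from acceleration is error-prone is that position is obtained by integrating twice. Even a small constant bias b in the reading therefore produces a position error that grows roughly as ½·b·t². The sketch below uses naive Euler integration with a hypothetical 0.01 m/s² bias on a stationary sensor sampled at 100 Hz, and shows the error reaching about half a meter after 10 s. It illustrates the error mechanism only and does not summarize Giansanti et al.'s analysis.

```python
def double_integrate(accels_m_s2, dt):
    """Naive Euler double integration of acceleration into displacement (m)."""
    velocity = 0.0
    position = 0.0
    for a in accels_m_s2:
        velocity += a * dt
        position += velocity * dt
    return position

rate_hz = 100
dt = 1.0 / rate_hz
bias = 0.01  # hypothetical constant error in m/s^2; the sensor is actually still
for seconds in (1, 10, 60):
    drift = double_integrate([bias] * (seconds * rate_hz), dt)
    print(f"{seconds:>2} s -> {drift:.3f} m of apparent movement")
# roughly 0.005 m, 0.5 m and 18 m
```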

Sensors that measure the amount of contact or pressure on a surface are a special case of acceleration- or velocity-based sensors. Pressure can be thought of as the outcome of impeded movement: when a finger attempts to push past a screen, the screen stops it from moving.

Other sensor types

This is a work in progress. Other sensor types will be discussed here.

Sensor-based considerations in LMA recognition

Different types of sensors are able to measure different low-context properties of human movement, such as the position, velocity, and acceleration of specific body parts. By applying computational techniques to these measurements, we can infer higher-context properties, such as gait information (stride length, walking speed), postural changes (falling, standing up), and energy expenditure. What has been underexplored is how these properties can be used to recognize higher-context qualities of human movement in order to create a semantics of expressive motion. One such semantic framework is Laban Movement Analysis (LMA). In the table below, we enumerate some existing sensor types, how each can be used for movement analysis, and how each could be applied to LMA. The table headings also include the notion of movement context, taken from Chapter 6 of Moore and Yamamoto (1988). In this chapter, the authors ask, "If we take the metaphor of movement is a language seriously, would human movement be a universal language, a foreign language, or a private code?" They propose that movement could be all three, depending on context. I propose that a hierarchical semantics of movement could be closely linked to Moore and Yamamoto's notion of movement context.

| Category | Sensor type | Example devices | What is measured | What can be inferred | Application to LMA | Movement context |
|---|---|---|---|---|---|---|
| Acceleration | Gyroscopes | iPhone, IDG500 dual-axis gyroscope, Wiimote MotionPlus | Angular velocity (rotation rate) | Postural transitions; gait information (Yang and Hsu, 2010); linearity, planarity, periodicity (based on work by Mary Pietrowicz at UIUC) | A prototype for recognizing LMA Effort using accelerometers is being developed by the Institute for Advanced Computing Applications and Technologies at the University of Illinois and the University of Illinois Dance Department, with the expertise of movement analyst Sarah Hook from the Dance Department, and in collaboration with Dr. Thecla Schiphorst (Subyen, Maranan, Schiphorst, Pasquier, & Bartram, 2011). | |
| | Accelerometers | Mobile phones (other than iPhone), Analog Devices triple axis ADXL335, Wiimote | Linear acceleration | | | |
| Touch | Pressure sensors | Tactex | Pressure | | LMA recognition has been applied to touch-based interfaces in interactive art (Schiphorst, Lovell, & Jaffe, 2002). | |
| Position | Vision | Cameras and webcams, Vicon, Kinect, Wiimote IR camera, IR-based motion capture systems | Position of body segments | Postural information; anything that can be inferred from acceleration sensors | Recognition of some aspects of the Shape, Space, and Effort categories has been reported (Rett & Dias, 2007a, 2007b; Rett, Dias, & Ahuactzin, 2008; Rett, Santos, & Dias, 2008; Santos, Prado, & Dias, 2009; Swaminathan et al., 2009; Zhao, 2001; Zhao & Badler, 2005). | |
| | Magnetic | | | | | |
| | Infrared | | | | | |
| Biometric | Eye-tracking | | Gaze | Visual attention; intent | Muscular tension is related to Effort Weight. Attention and intent are key themes in Effort Space. We propose that arousal can be related to the extent to which a mover uses "fighting" qualities over "indulging" qualities. | |
| | GSR | | Electrical conductance of the skin | Arousal | | |
| | Breath sensors | | Rate of breathing; volume of inspiration/expiration | Arousal; energy expenditure | | |
| | EMG | | Electrical activity produced by skeletal muscles | Muscular tension | | |
| | Heart rate sensors | | Heart rate | Arousal; level of physical activity | | |
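The table relates EMG to muscular tension, which in turn relates to Effort Weight. A common first step in turning raw EMG into a tension estimate is a moving-window root-mean-square (RMS) envelope. The sketch below assumes a raw EMG trace as a NumPy array and a hypothetical 100 ms window, and it is not tied to any particular sensor. How such an envelope might map onto Effort Weight remains an open question.

```python
import numpy as np

def rms_envelope(emg, fs_hz, window_s=0.1):
    """Moving-window RMS of a raw EMG signal (output has the input's length).

    emg: 1-D array of raw samples; fs_hz: sampling rate in Hz.
    window_s: hypothetical smoothing window length in seconds.
    """
    emg = np.asarray(emg, dtype=float)
    emg = emg - emg.mean()                    # remove DC offset
    n = max(1, int(round(window_s * fs_hz)))  # window length in samples
    kernel = np.ones(n) / n
    # Mean of the squared samples in each window, then the square root.
    return np.sqrt(np.convolve(emg ** 2, kernel, mode="same"))

# Synthetic trace: 1 s of low-level activity followed by 1 s of strong contraction.
fs = 1000
rng = np.random.default_rng(0)
trace = np.concatenate([0.05 * rng.standard_normal(fs), 0.5 * rng.standard_normal(fs)])
env = rms_envelope(trace, fs)
print(env[:fs].mean() < env[fs:].mean())  # True
```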

References

- Aggarwal, J. K., & Park, S. (2004). Human motion: Modeling and recognition of actions and interactions.

- Analog Devices. (2007). ADXL330: Small, Low Power, 3-Axis "3g iMEMS" Accelerometer. Retrieved from http://www.analog.com/static/imported-files/data_sheets/ADXL330.pdf

- Berman, S., & Stern, H. (2011). Sensors for Gesture Recognition Systems. IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, PP(99), 1-14. doi:10.1109/TSMCC.2011.2161077

- Dilger, D. E. (2010, June 16). Inside iPhone 4: Gyro spins Apple ahead in gaming [Page 2]. AppleInsider. Retrieved August 22, 2011, from http://www.appleinsider.com/articles/10/06/16/inside_iphone_4_gyro_spins_apple_ahead_in_gaming.html&page=2

- Garg, P., Aggarwal, N., & Sofat, S. (2009). Vision Based Hand Gesture Recognition. World Academy of Science, Engineering and Technology, 49, 972-977.

- Gavrila, D. M. (1999). The Visual Analysis of Human Movement: A Survey. Computer Vision and Image Understanding, 73(1), 82-98.

- Giansanti, D., Macellari, V., Maccioni, G., & Cappozzo, A. (2003). Is it feasible to reconstruct body segment 3-D position and orientation using accelerometric data? IEEE Transactions on Biomedical Engineering, 50(4), 476-483. doi:10.1109/TBME.2003.809490

- Mitra, S., & Acharya, T. (2007). Gesture Recognition: A Survey. IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, 37(3), 311-324. doi:10.1109/TSMCC.2007.893280

- Moore, C.-L., & Yamamoto, K. (1988). Beyond Words: Movement Observation and Analysis. New York, N.Y., U.S.A: Gordon and Breach Science Publishers.

- Nakata, T., Mori, T., & Sato, T. (2002). Analysis of impression of robot bodily expression. Journal of Robotics and Mechatronics, 14(1). Retrieved from http://staff.aist.go.jp/toru-nakata/LabanEng.pdf

- Pavlovic, V. I., Sharma, R., & Huang, T. S. (1997). Visual interpretation of hand gestures for human-computer interaction: a review. IEEE Transactions on Pattern Analysis and Machine Intelligence, 19(7), 677-695. doi:10.1109/34.598226

- Rett, J., & Dias, J. (2007a). Bayesian models for Laban Movement Analysis used in human machine interaction. Proceedings of ICRA 2007 Workshop on "Concept Learning for Embodied Agents." Retrieved from http://paloma.isr.uc.pt/pub/bscw.cgi/d56530/Paper@ICRA07-WS.pdf

- Rett, J., & Dias, J. (2007b). Human-robot interface with anticipatory characteristics based on Laban Movement Analysis and Bayesian models. 2007 IEEE 10th International Conference on Rehabilitation Robotics (pp. 257-268). Presented at the 2007 IEEE 10th International Conference on Rehabilitation Robotics, Noordwijk, Netherlands. doi:10.1109/ICORR.2007.4428436

- Rett, J., Dias, J., & Ahuactzin, J. M. (2008). Laban Movement Analysis using a Bayesian model and perspective projections. In C. Rossi (Ed.), Brain, Vision and AI (pp. 183-210). Vienna: InTech Education and Publishing.

- Rett, J., Santos, L., & Dias, J. (2008). Laban Movement Analysis for multi-ocular systems. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2008) (pp. 761-766).

- Rowe, D. (2008). Towards Robust Multiple-Target tracking in Unconstrained Human-Populated Environments. Department of Computer Science UAB and Computer Vision Center, Barcelona, Spain.

- Sadun, E. (2007, September 10). iPhone Coding: Using the Accelerometer. TUAW - The Unofficial Apple Weblog. Retrieved August 22, 2011, from http://www.tuaw.com/2007/09/10/iphone-coding-using-the-accelerometer/

- Santos, L., Prado, J. A., & Dias, J. (2009). Human Robot interaction studies on Laban human movement analysis and dynamic background segmentation (pp. 4984-4989). Presented at the IEEE/RSJ International Conference on Intelligent Robots and Systems 2009, St. Louis, MO. doi:10.1109/IROS.2009.5354564

- Schiphorst, T. (2008). Bridging embodied methodologies from somatics and performance to human computer interaction (Ph.D. dissertation). School of Computing, Communications and Electronics, Faculty of Technology, University of Plymouth, United Kingdom. Retrieved from http://www.sfu.ca/~tschipho/PhD/PhD_thesis.html

- Schiphorst, T., Lovell, R., & Jaffe, N. (2002). Using a gestural interface toolkit for tactile input to a dynamic virtual space. Conference on Human Factors in Computing Systems (pp. 754-755).

- Subyen, P., Maranan, D. S., Schiphorst, T., Pasquier, P., & Bartram, L. (2011). EMVIZ: The Poetics of Movement Quality Visualization. Proceedings of Computational Aesthetics 2011, Eurographics Workshop on Computational Aesthetics in Graphics, Visualization and Imaging. Presented at Computational Aesthetics 2011, Vancouver, Canada.

- Swaminathan, D., Thornburg, H., Mumford, J., Rajko, S., James, J., Ingalls, T., Campana, E., et al. (2009). A dynamic Bayesian approach to computational Laban shape quality analysis. Advances in Human-Computer Interaction, 2009, 1-17.

- Texas Instruments. (2010, December). eZ430-Chronos™ Development Tool User's Guide. Texas Instruments. Retrieved from http://www.ti.com/lit/ug/slau292c/slau292c.pdf

- VTI Technologies. (n.d.). CMA3000-D01 3-Axis Ultra Low Power Accelerometer with Digital SPI and I2C Interface. VTI Technologies. Retrieved from http://www.vti.fi/midcom-serveattachmentguid-1e05eb0417155de5eb011e0b896954a036ddb89db89/cma3000_d01_datasheet_8277800a.03.pdf

- Wachs, J. P., Kölsch, M., Stern, H., & Edan, Y. (2011). Vision-based hand-gesture applications. Communications of the ACM, 54(2), 60. doi:10.1145/1897816.1897838

- Wisniowski, H. (2006, May 9). Analog Devices And Nintendo Collaboration Drives Video Game Innovation With iMEMS Motion Signal Processing Technology. Analog Devices, Inc. Retrieved August 22, 2011, from http://www.analog.com/en/press-release/May_09_2006_ADI_Nintendo_Collaboration/press.html

- Wu, Y., & Huang, T. (1999). Vision-based gesture recognition: A review. Gesture-based communication in human-computer interaction, 103-115.

- Yang, C. C., & Hsu, Y. L. (2010). A review of accelerometry-based wearable motion detectors for physical activity monitoring. Sensors, 10(8), 7772-7788.

- Yilmaz, A., Javed, O., & Shah, M. (2006). Object tracking. ACM Computing Surveys, 38(4), 13-es. doi:10.1145/1177352.1177355

- Zhao, L. (2001). Synthesis and acquisition of Laban Movement Analysis qualitative parameters for communicative gestures (Ph.D. dissertation). University of Pennsylvania, Philadelphia, PA, USA.

- Zhao, L., & Badler, N. (2005). Acquiring and validating motion qualities from live limb gestures. Graphical Models, 67(1), 1-16. doi:10.1016/j.gmod.2004.08.002